[Kubernetes] kube-apiserver etcd Storage: Architecture and Source Code Analysis

Architecture Analysis

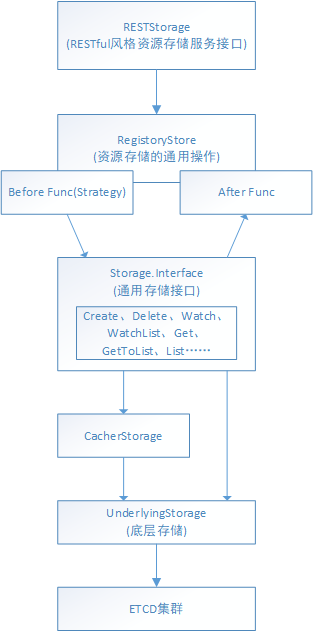

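In Kubernetes, all data is stored in etcd, and only kube-apiserver talks to etcd directly. Every other component reaches etcd indirectly through kube-apiserver. The figure shows the architecture of the interaction between kube-apiserver and etcd (image source: Zheng Dongxu (郑东旭), 《Kubernetes源码分析》).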

Example Walkthrough

Take the pod resource as an example. The NewStore function creates the PodStorage for pods. It first initializes a genericregistry.Store struct, which is the core piece.

The important part of the store lives in CompleteWithOptions, which does a lot of essential work. Start with the GetRESTOptions call.

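GetRESTOptions initializes one of two kinds of storage: raw (undecorated) storage, or storage with a cache. By default the watch cache is enabled when kube-apiserver starts, so this article analyzes the cached variant. That storage is set up through the StorageWithCacher function. At this point it is only declared; it is actually invoked later.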
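
The "declare now, build later" choice between raw and cached storage can be sketched as a decorator function stored in the options. This is a minimal illustration, not the apiserver code: `Storage`, `rawStorage`, `cachedStorage`, `Decorator`, and `getRESTOptions` are hypothetical names made up for this sketch.

```go
package main

import "fmt"

// Storage is a hypothetical stand-in for the storage interface used by the apiserver.
type Storage interface {
	Describe() string
}

// rawStorage represents storage that talks to etcd directly (illustrative only).
type rawStorage struct{ prefix string }

func (r rawStorage) Describe() string { return "etcd3:" + r.prefix }

// cachedStorage wraps another Storage; the real cacher would serve watches from memory.
type cachedStorage struct{ inner Storage }

func (c cachedStorage) Describe() string { return "cacher(" + c.inner.Describe() + ")" }

// Decorator builds a Storage for a resource prefix. Holding a Decorator only
// declares how to build the storage; nothing is created until it is called.
type Decorator func(prefix string) Storage

func undecorated(prefix string) Storage { return rawStorage{prefix: prefix} }

func withCacher(prefix string) Storage {
	return cachedStorage{inner: undecorated(prefix)}
}

// RESTOptions carries the chosen decorator to whoever completes the store later.
type RESTOptions struct {
	Decorator Decorator
}

// getRESTOptions picks the decorator based on whether the watch cache is enabled.
func getRESTOptions(enableWatchCache bool) RESTOptions {
	if enableWatchCache {
		return RESTOptions{Decorator: withCacher}
	}
	return RESTOptions{Decorator: undecorated}
}

func main() {
	opts := getRESTOptions(true)
	// The storage is only constructed here, when the decorator is invoked.
	fmt.Println(opts.Decorator("/pods").Describe())
}
```

Keeping the decorator as a function value means the caller decides when the storage is actually built, which matches the "declared here, used later" behavior described above.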

Next, look at cacherstorage.NewCacherFromConfig in detail, starting with how the watchCache is created.

newWatchCache initializes the watchCache together with its local cache structure, store. The eventHandler writes each event into the cacher.incoming channel.
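
The relationship between the watch cache, its local store, and the incoming channel can be sketched as follows. This is a simplified model under stated assumptions: the `event` type, the map-based store, and the method names `processEvent` and `get` are invented for the illustration and do not mirror the real signatures.

```go
package main

import (
	"fmt"
	"sync"
)

// event is a simplified stand-in for the watch cache event type.
type event struct {
	Type            string
	Key             string
	Value           string
	ResourceVersion uint64
}

// watchCache keeps the latest value per key and forwards every event to eventHandler.
type watchCache struct {
	mu           sync.Mutex
	store        map[string]string
	eventHandler func(event)
}

func newWatchCache(handler func(event)) *watchCache {
	return &watchCache{store: make(map[string]string), eventHandler: handler}
}

// processEvent updates the local store first, then hands the event to the handler.
func (w *watchCache) processEvent(e event) {
	w.mu.Lock()
	if e.Type == "DELETED" {
		delete(w.store, e.Key)
	} else {
		w.store[e.Key] = e.Value
	}
	w.mu.Unlock()
	if w.eventHandler != nil {
		w.eventHandler(e)
	}
}

// get reads the cached value for a key.
func (w *watchCache) get(key string) (string, bool) {
	w.mu.Lock()
	defer w.mu.Unlock()
	v, ok := w.store[key]
	return v, ok
}

// cacher owns the incoming channel that the watch cache's handler writes to.
type cacher struct {
	incoming   chan event
	watchCache *watchCache
}

func newCacher() *cacher {
	c := &cacher{incoming: make(chan event, 100)}
	// The cacher registers its own method as the watch cache's event handler.
	c.watchCache = newWatchCache(c.processEvent)
	return c
}

// processEvent pushes the event onto the incoming channel for later dispatch.
func (c *cacher) processEvent(e event) { c.incoming <- e }

func main() {
	c := newCacher()
	c.watchCache.processEvent(event{Type: "ADDED", Key: "default/nginx", Value: "v1", ResourceVersion: 1})
	e := <-c.incoming
	fmt.Println(e.Type, e.Key)
}
```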

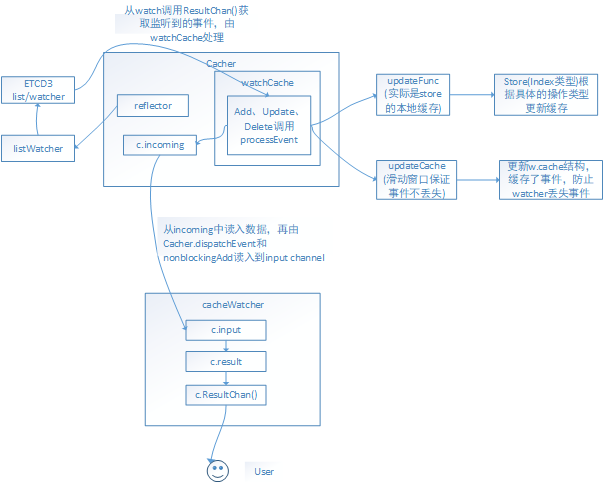

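Next, the Reflector is initialized. It wraps the listerWatcher and watchCache described above, and it is the part that interacts with etcd directly. cacher.dispatchEvents is then started to consume the incoming channel: each event is handed to c.dispatchEvent, which sends it to a watcher's input channel. The data in input is consumed by cacheWatcher.process.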

The listerWatcher is initialized as a cacherListerWatcher, and this is where the operations against etcd happen.

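Now look at what happens once an event reaches the cacher. cacher.dispatchEvents reads events from the incoming channel and passes each one to c.dispatchEvent, which calls watcher.nonblockingAdd to write the event into the watcher's input channel. The event is then handled by the cacheWatcher's process function, which runs in a goroutine started when the cacheWatcher is created.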
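
The fan-out path from incoming to each watcher's input channel can be sketched like this. It is a reduced model, not the real implementation: the `result` channel, the `watch` and `deliver` helpers, and the choice to silently drop events for a full watcher are assumptions of this sketch (the real code treats slow watchers more carefully).

```go
package main

import (
	"fmt"
	"sync"
)

type event struct {
	Type string
	Key  string
}

// cacheWatcher receives events on input and forwards them to result.
type cacheWatcher struct {
	input  chan event
	result chan event
}

// newCacheWatcher starts the process goroutine as soon as the watcher is created.
func newCacheWatcher(buffer int) *cacheWatcher {
	w := &cacheWatcher{
		input:  make(chan event, buffer),
		result: make(chan event, buffer),
	}
	go w.process()
	return w
}

// nonblockingAdd tries to enqueue the event and reports false if input is full.
func (w *cacheWatcher) nonblockingAdd(e event) bool {
	select {
	case w.input <- e:
		return true
	default:
		return false
	}
}

// process drains input and forwards each event until input is closed.
func (w *cacheWatcher) process() {
	defer close(w.result)
	for e := range w.input {
		w.result <- e
	}
}

type cacher struct {
	incoming chan event
	mu       sync.Mutex
	watchers []*cacheWatcher
}

// watch registers a new watcher (hypothetical helper for this sketch).
func (c *cacher) watch(buffer int) *cacheWatcher {
	w := newCacheWatcher(buffer)
	c.mu.Lock()
	c.watchers = append(c.watchers, w)
	c.mu.Unlock()
	return w
}

// dispatchEvents reads incoming until it is closed, then stops all watchers.
func (c *cacher) dispatchEvents() {
	for e := range c.incoming {
		c.dispatchEvent(e)
	}
	c.mu.Lock()
	defer c.mu.Unlock()
	for _, w := range c.watchers {
		close(w.input)
	}
}

// dispatchEvent fans one event out to every registered watcher.
func (c *cacher) dispatchEvent(e event) {
	c.mu.Lock()
	defer c.mu.Unlock()
	for _, w := range c.watchers {
		w.nonblockingAdd(e) // this sketch simply drops the event if the watcher is full
	}
}

// deliver pushes events through the whole pipeline and collects what one watcher sees.
func deliver(events []event) []event {
	c := &cacher{incoming: make(chan event, len(events))}
	w := c.watch(len(events) + 1)
	go c.dispatchEvents()
	for _, e := range events {
		c.incoming <- e
	}
	close(c.incoming)
	var out []event
	for e := range w.result {
		out = append(out, e)
	}
	return out
}

func main() {
	out := deliver([]event{
		{Type: "ADDED", Key: "default/nginx"},
		{Type: "MODIFIED", Key: "default/nginx"},
	})
	for _, e := range out {
		fmt.Println(e.Type, e.Key)
	}
}
```

The non-blocking send is the key design point: one slow watcher must not stall dispatch for every other watcher of the same resource.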

cacher.startCaching runs the reflector's ListAndWatch, which in the end still operates on the etcd storage.
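
The list-then-watch pattern behind ListAndWatch can be sketched against an in-memory backend. This is a heavily reduced model: `listerWatcher`, `fakeEtcd`, `store`, and `listAndWatch` are hypothetical types for illustration, and the real reflector also handles errors, resyncs, and reconnects, all of which are omitted here.

```go
package main

import "fmt"

type event struct {
	Type string
	Key  string
	RV   uint64
}

// listerWatcher is a much smaller, hypothetical version of the ListerWatcher idea.
type listerWatcher interface {
	List() (items []string, resourceVersion uint64)
	Watch(fromRV uint64) <-chan event
}

// fakeEtcd is an in-memory backend standing in for etcd.
type fakeEtcd struct {
	items   []string
	rv      uint64
	pending []event
}

func (f *fakeEtcd) List() ([]string, uint64) { return f.items, f.rv }

// Watch replays only the events newer than fromRV, then closes the channel.
func (f *fakeEtcd) Watch(fromRV uint64) <-chan event {
	ch := make(chan event, len(f.pending))
	for _, e := range f.pending {
		if e.RV > fromRV {
			ch <- e
		}
	}
	close(ch)
	return ch
}

// store plays the role of the watch cache on the receiving side.
type store struct {
	keys   map[string]bool
	lastRV uint64
}

// replace resets the store to the listed snapshot.
func (s *store) replace(items []string, rv uint64) {
	s.keys = make(map[string]bool, len(items))
	for _, k := range items {
		s.keys[k] = true
	}
	s.lastRV = rv
}

// apply folds a single watch event into the store.
func (s *store) apply(e event) {
	if e.Type == "DELETED" {
		delete(s.keys, e.Key)
	} else {
		s.keys[e.Key] = true
	}
	s.lastRV = e.RV
}

// listAndWatch takes a full snapshot, then applies changes after that snapshot's version.
func listAndWatch(lw listerWatcher, s *store) {
	items, rv := lw.List()
	s.replace(items, rv)
	for e := range lw.Watch(rv) {
		s.apply(e)
	}
}

func main() {
	backend := &fakeEtcd{
		items: []string{"default/a", "default/b"},
		rv:    5,
		pending: []event{
			{Type: "ADDED", Key: "default/c", RV: 6},
			{Type: "DELETED", Key: "default/a", RV: 7},
		},
	}
	s := &store{}
	listAndWatch(backend, s)
	fmt.Println(len(s.keys), s.lastRV)
}
```

Watching from the resource version returned by List is what prevents changes from being missed or applied twice between the snapshot and the stream.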

For the detailed flow, refer to the following diagram, which uses pod as the example.

Summary

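This article only analyzes the cacher itself. Many steps along the way are not covered and still need to be filled in (for example, how data is encoded and decoded on writes and reads). The kube-apiserver code is quite complex and calls for a deeper level of understanding.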

Author: zForrest

Last updated: 2020-08-18 18:33